Jan 13

James Kavanagh

Avoiding the 5 biggest mistakes writing AI Governance policies

How to write effective AI Governance policies while avoiding the worst mistakes that could undermine the success of your governance initiatives.

I've spent the last few months poring over AI governance, risk and use policies. Some published, some private internal copies from enterprises, public sector agencies and small businesses. I've spoken to dozens of practitioners to validate my thinking and refine what I thought I knew about what makes governance actually work.

I've turned all of that into training and policy templates now available through the Writing AI Governance Policies course. Along the way, I've identified a host of good and bad practices. What works, what doesn't, and what looks good on paper but falls apart in practice. The course includes fully worked templates for an AI Governance Charter, AI Governance Policy, AI Risk Management Policy, and AI Use Policy, plus a streamlined single policy for smaller organisations that adopt AI tools rather than build them. Each template comes with detailed walkthroughs explaining not just what to write, but why each element matters.

But before you dive into that, I want to share what I've learned. These are the top five worst practices I've found in real policies, in reverse order. There are plenty more covered in the course, but these are the ones that cause the most damage.

Mistake #5.

Policies that don't match organisational scale

I've seen thirty-person professional services firms try to implement governance frameworks designed for enterprises with thousands of employees. The results are predictable: confusion, overhead nobody can sustain, and governance that exists on paper while actual AI use proceeds unchecked.

The problem isn't that smaller organisations don't need governance. They absolutely do. The risks don't disappear just because you're smaller. The problem is that a three-policy architecture with tiered committees, separate mechanism owners, and elaborate documentation hierarchies doesn't work when the same person handles IT, compliance, and half a dozen other responsibilities.

What happens? Committees that never meet. Roles that exist in name only. Documentation created once and never updated. This is worse than simpler governance, because it creates false confidence. Leadership believes governance is in place while actual AI use proceeds without meaningful oversight.

The solution is right-sizing governance to your situation. That's why the course provides templates for both medium-sized organisations and smaller ones. The minimal policy preserves everything essential: guiding principles, clear accountability, risk-based classification, explicit guidance on acceptable and prohibited uses, human oversight, incident reporting. What's simplified is the structure. One AI Governance Lead replaces multiple tiers of committees. Streamlined processes replace extensive cross-references.

Critically, both templates are consistent. If you start small and grow, you can evolve toward the fuller architecture without starting over.

Minimum viable policy and guidance within a streamlined AI Governance Policy for small organisations that adopt AI

Mistake #4.

Policies that try to serve everyone end up serving no one

This might be the most common mistake I've encountered. Organisations create a single policy document that tries to describe governance mechanisms, hierarchy of decision-making, risk assessment, and user guidance all at once. It does a poor job of all of them.

The symptoms are obvious once you know what to look for. The policy opens with high-level principles aimed at the board, shifts into procedural detail only specialists could follow, then pivots to practical guidance for frontline employees. The tone is inconsistent. Nobody knows which parts apply to them.

The root cause is a failure to recognise that governance policies and use policies serve fundamentally different purposes. A governance policy establishes your framework: the principles, structures, and accountability that define how your organisation will oversee AI. It's strategic, aimed at leadership, and should be relatively stable.

A use policy is operational. It translates your framework into daily guidance for everyone. What's encouraged, what's allowed with controls, what's prohibited. It needs to be accessible to any employee while remaining specific enough to provide genuine guidance.

When you combine these functions, you compromise both. Your governance framework becomes buried in operational detail. Your user guidance becomes wrapped in governance language that makes it impenetrable.

That's why the course separates Use Policies from Governance Policies. Each serves a distinct purpose for a distinct audience. Each can evolve at its own pace.

Structure, accountability and mechanisms in the AI Governance Policy

Practical guidance for user behaviour in the AI Use Policy

Mistake #3.

Policies that create a governance island

Here's a scenario I've encountered multiple times. An organisation invests heavily in a comprehensive AI governance framework. They create thoughtful policies, establish committees, define roles and responsibilities. And then they build it all as a standalone structure that doesn't connect to anything else in the organisation.

The result is a governance island. AI governance operates in parallel to your existing data protection, IT security, procurement, and HR policies rather than integrating with them. You have two governance systems: one for everything else, and a special one for AI.

This is unsustainable. The same AI system your governance committee reviews also touches data protection requirements, security controls, procurement processes, and employment practices. When these systems don't talk to each other, gaps emerge. Nobody is quite sure which framework applies.

It also wastes organisational muscle memory. Your people already know how existing governance works. Making them learn entirely new systems for AI creates unnecessary friction.

The alternative is integration, not reinvention. That's why the course includes a complete module on integrating AI into existing frameworks: updating HR policies for AI-assisted hiring, strengthening IT security for shadow AI risks, enhancing procurement with AI-specific vendor assessments, updating incident management for distinctive AI failure modes, and revising customer terms for transparency about AI use.

The principle is connecting AI governance to your existing fabric, creating one coherent system rather than parallel structures.

Mistake #2.

Policies that promise everything and commit to nothing

I've lost count of how many AI governance policies I've read that are full of beautiful principles and completely empty of meaningful commitments. They describe values like fairness, transparency, and accountability in soaring language. They declare commitment to responsible AI. And then they stop.

No specifics about what those principles mean in practice. No clear ownership for ensuring they're upheld. No measurable commitments anyone could be held accountable for. Just words on a page.

This is ethics-washing dressed up as governance. It creates the appearance of responsible AI without the substance. Leadership sees a policy document and believes governance is in place. Employees see principles and assume someone is ensuring they're followed. Nobody is.

Effective governance requires grounding principles in concrete commitments. Not "we value transparency" but "we will provide clear documentation of how AI systems make decisions that affect customers, with specific content requirements and review processes."

This is where the Principles Canvas comes in. It's a tool that maps from organisational values through principles to explicit "we will" commitments and the evidence that demonstrates them. It forces the conversation from abstract ideals to concrete actions. What exactly are we committing to do? Who owns that commitment? How will we know if we're doing it?

Those commitments then flow through your governance policy. Every principle is grounded in specific actions. Every action has an owner. The policy becomes a document that means something.
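The course's actual canvas format isn't reproduced here, but the chain this section describes, from value to principle to an explicit "we will" commitment with an owner and evidence, can be sketched as a small data structure with a check that flags ungrounded principles. This is a minimal Python sketch under that assumption: the class names, fields and example entries are hypothetical illustrations, not the Principles Canvas itself.

```python
from dataclasses import dataclass, field


@dataclass
class Commitment:
    """Hypothetical: one explicit 'we will' statement, its owner and its evidence."""
    we_will: str
    owner: str = ""
    evidence: list[str] = field(default_factory=list)


@dataclass
class Principle:
    """Hypothetical: a principle and the organisational value it expresses."""
    name: str
    value: str
    commitments: list[Commitment] = field(default_factory=list)


def find_gaps(principles: list[Principle]) -> list[str]:
    """Flag every principle not grounded in an owned, evidenced commitment."""
    gaps = []
    for p in principles:
        if not p.commitments:
            gaps.append(f"{p.name}: no 'we will' commitments")
        for c in p.commitments:
            if not c.owner:
                gaps.append(f"{p.name}: '{c.we_will}' has no owner")
            if not c.evidence:
                gaps.append(f"{p.name}: '{c.we_will}' has no evidence")
    return gaps


# Illustrative entries only.
canvas = [
    Principle(
        name="Transparency",
        value="Customer trust",
        commitments=[Commitment(
            we_will="document how AI systems make decisions that affect customers",
            owner="AI Governance Lead",
            evidence=["published decision documentation", "review record"],
        )],
    ),
    Principle(name="Fairness", value="Equal treatment"),  # a principle with no commitments yet
]

for gap in find_gaps(canvas):
    print(gap)
# Fairness: no 'we will' commitments
```

The point of the check is the same as the canvas conversation: a principle that produces no gaps has a concrete action, a named owner, and a way of knowing whether it is being done.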

You can learn how to turn organisational values and principles into specific commitments using the AI Career Pro Principles Canvas in Course 2, Module 7 of the Program.

Mistake #1.

Policies that don't assert the mechanisms that make governance real

And finally, the most fundamental mistake: policies that describe high-level intent but don't ground any of it in operational mechanisms.

Here's the thing. Good intentions never work. You need good mechanisms to make anything happen. A policy might require teams to test their models for bias. It might even provide detailed instructions and metrics. But it still depends on teams remembering, caring, and executing correctly under pressure.

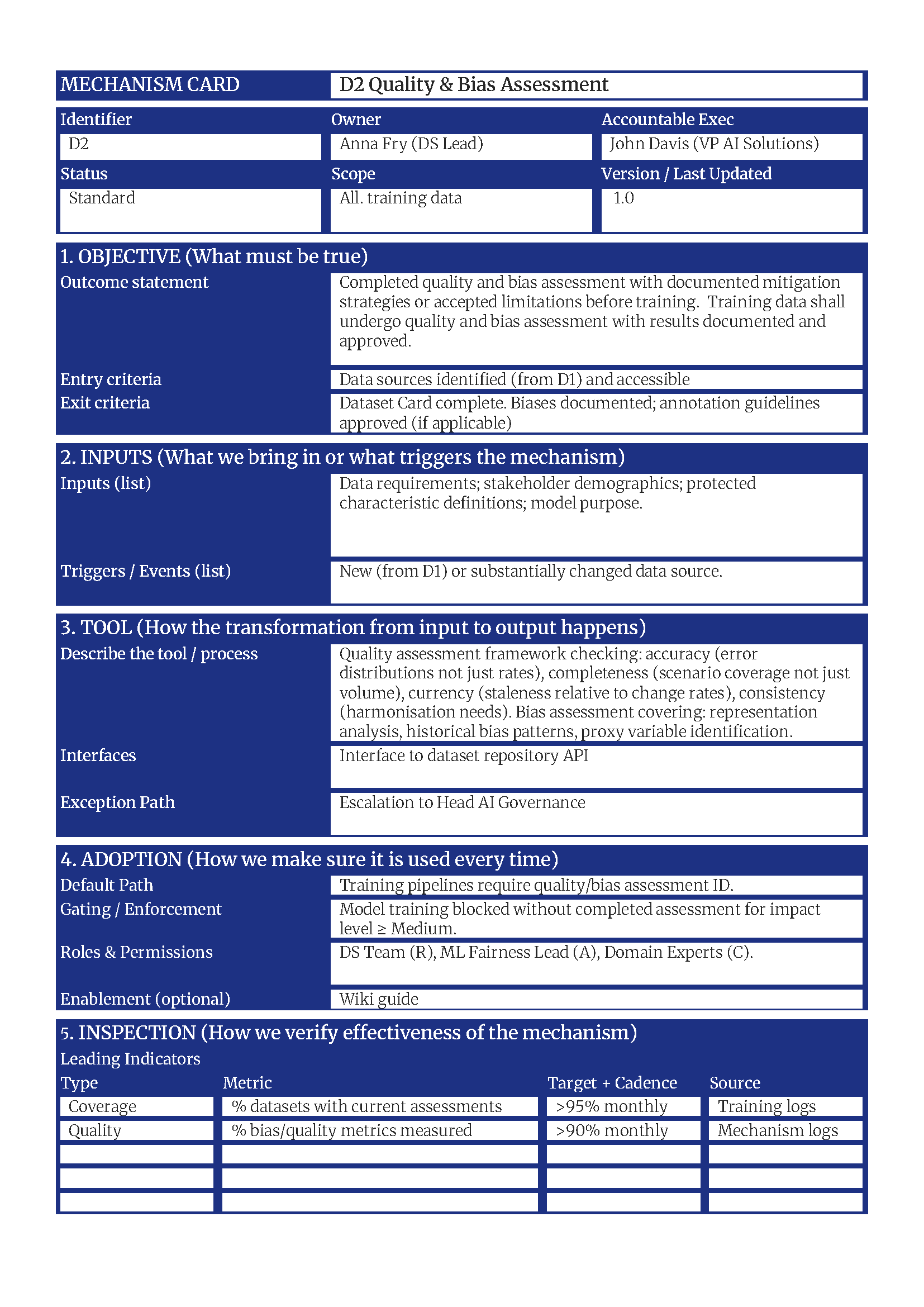

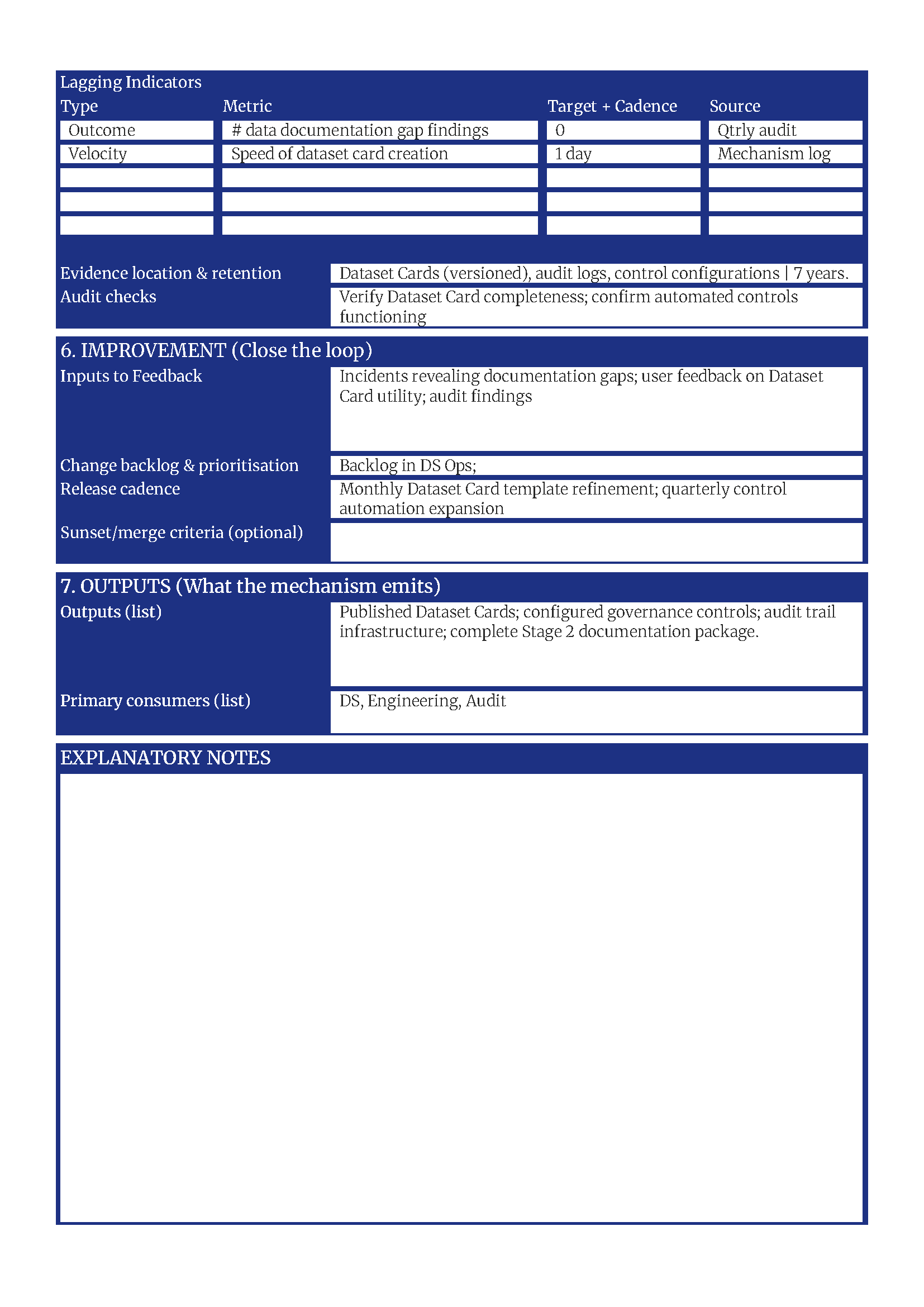

A mechanism is different. It's a closed-loop, adaptive system that builds desired behaviours into the structure of work itself. It takes defined inputs, transforms them through concrete tools and processes, and produces predictable outputs. Most importantly, it continuously improves through feedback loops. This is how some of the most operationally excellent companies in the world, like Amazon, implement real governance at scale.

A simple representation of a closed-loop adaptive governance mechanism

Think about bias testing as a mechanism rather than a policy requirement. The mechanism makes testing a mandatory gate in the deployment pipeline. It automatically runs standardised tests and blocks deployment until issues are resolved. But here's what makes it adaptive: every failure becomes a lesson. Every workaround reveals a gap. The mechanism improves its test suite based on what it learns from production incidents. It gets stronger over time rather than decaying into bureaucracy.
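To make the distinction concrete, here is a minimal Python sketch of a bias-testing gate, assuming a model report that records a positive-outcome rate per demographic group. The names (`BiasGate`, `parity_test`, `learn_from_incident`), the report shape and the 0.1 threshold are hypothetical illustrations, not part of any course template or real tool; a production pipeline would use established fairness metrics and its CI system's own gating. What it shows is the loop: the gate blocks deployment on any failing test, and each incident adds a permanent test to the suite.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical report shape: attribute -> {group: positive-outcome rate}.
Report = dict[str, dict[str, float]]
BiasTest = Callable[[Report], bool]


def parity_test(attribute: str, threshold: float = 0.1) -> BiasTest:
    """Passes if outcome rates across groups of `attribute` differ by at most
    `threshold` (an assumed value). Missing data counts as a failure, so the
    gate cannot be skipped by simply not measuring."""
    def test(report: Report) -> bool:
        rates = report.get(attribute)
        if not rates:
            return False
        return max(rates.values()) - min(rates.values()) <= threshold
    return test


@dataclass
class BiasGate:
    """Illustrative deployment gate: every release must pass every test."""
    tests: dict[str, BiasTest] = field(default_factory=dict)

    def failures(self, report: Report) -> list[str]:
        return [name for name, test in self.tests.items() if not test(report)]

    def approve(self, report: Report) -> bool:
        # Mandatory gate: deployment proceeds only when nothing fails.
        return not self.failures(report)

    def learn_from_incident(self, name: str, test: BiasTest) -> None:
        # Feedback loop: a production incident becomes a permanent test.
        self.tests[name] = test


gate = BiasGate({"gender_parity": parity_test("gender")})
report = {"gender": {"female": 0.52, "male": 0.55},
          "age_band": {"18-30": 0.40, "31-60": 0.58}}
print(gate.approve(report))   # True: gender gap of about 0.03

# An incident reveals an age disparity the suite never checked.
gate.learn_from_incident("age_parity", parity_test("age_band"))
print(gate.approve(report))   # False: age gap of about 0.18
print(gate.failures(report))  # ['age_parity']
```

Note that the same report passes before the incident and fails after it: the mechanism got stricter because of what it learned, not because anyone remembered a policy.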

An example of a Mechanism Card that provides a rapid, formal definition of a governance mechanism like bias assessment.

That's the difference. Policies decay. People forget them, work around them, or just ignore them under pressure. Adaptive mechanisms strengthen. They learn from every interaction and continuously close gaps.

The policies in the course are explicit about this. They assert not just what controls should exist, but what machinery implements those controls and how it improves over time. That's what turns intent into reality.

You can learn how to design and specify adaptive governance mechanisms that span the AI governance lifecycle, and use over 30 prepared Mechanism Card templates, in Course 3 of the Program.

Writing policies that actually work

The good news is that every one of these mistakes is avoidable.

That's exactly what I teach in the Writing AI Governance Policies course. You'll walk away with policies that avoid these five big mistakes and the dozens of smaller ones I've identified along the way. You can use the Principles Canvas to map your organisation's values into concrete commitments. You'll get fully worked templates for an AI Governance Charter, AI Governance Policy, AI Risk Management Policy, and AI Use Policy, plus a streamlined single AI Policy for smaller organisations that adopt AI tools rather than build them.

Beyond the templates, you'll get section-by-section walkthroughs showing you how to adapt each one to your unique context, your industry, your risk profile, and your organisational culture. And you'll learn to design policies grounded in operational mechanisms that adapt as good governance becomes embedded in how AI is actually built and used.

This course isn't about copying someone else's policy template. It's about understanding what makes governance work for real, so you can build something that fits your organisation and actually delivers results.

I know from experience that these practices can save AI governance practitioners months of effort and help make sure the good intent of your organisation isn't derailed by avoidable mistakes from the very start.

If you're working in AI governance and you're not already part of our program, check out the course by clicking enrol below. You can watch the first module for free and then decide if you'd like to go ahead.

Or simply read more about the AI Governance Practitioner Program here: